Abstract

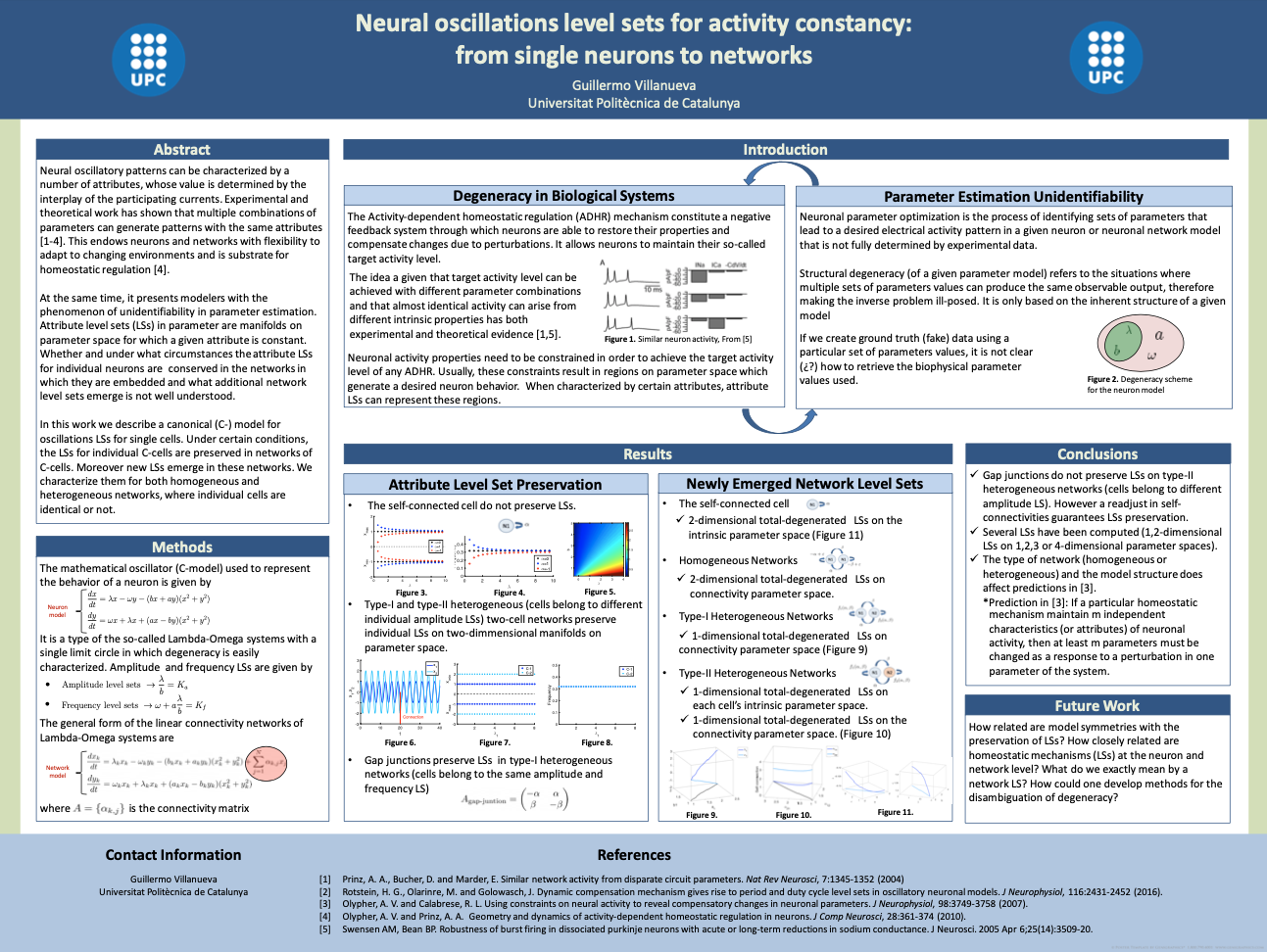

Neural oscillatory patterns can be characterized by a number of attributes such as frequency, amplitude, duty cycle, characteristic transition times between silent and active phases, and number of spikes per burst. The values of these attributes are determined by the interplay of the participating currents and, for the appropriate currents, can be captured by the maximal synaptic conductances. Experimental and theoretical work has shown that multiple combinations of parameters can generate patterns with the same attributes. This endows neurons and networks with the flexibility to adapt to changing environments and provides a substrate for homeostatic regulation. At the same time, it presents modelers with the phenomenon of unidentifiability in parameter estimation. Attribute level sets (LSs) in parameter space are curves (or surfaces or hypersurfaces) joining parameter values for which a given attribute is constant. Typically, but not always, LSs are attribute-dependent. Previous work has characterized the dynamic compensatory mechanisms that generate activity-attribute LSs in realistic models of single neurons. Whether and under what circumstances the attribute LSs of individual neurons are conserved in the networks in which they are embedded, and what additional network level sets emerge, is not well understood.

In this work we describe a canonical (C-) model for oscillation LSs in single cells exhibiting a wide range of realistic neuronal oscillatory patterns. The model is canonical in the sense that all attributes share the same LS (the oscillations are identical along LSs), and it can be considered an idealization of the familiar conductance-based two-dimensional models. A systematic symmetry breaking in the C-model leads to the familiar phase-plane diagrams for neuronal oscillations and to the separation of LSs for different attributes. The LSs of individual C-cells are preserved in networks of C-cells connected via gap junctions in which all cells belong to the same LS but are not necessarily identical. In contrast, LSs are not preserved in excitatory or inhibitory networks, except for certain connectivity patterns for which the model symmetries are maintained. However, new level sets emerge in these networks. We characterize them in terms of the single-cell LSs and the connectivity parameters for both homogeneous and heterogeneous networks, in which the individual cells within the considered LS are identical or non-identical, respectively.

Background and Motivation

Cellular homeostasis mechanisms allow a neuron to maintain a functional target pattern in the presence of changes in its membrane properties and electrical activity. Homeostatic regulation is thus associated with stable neuronal activity, which gives the brain the reliability it needs to perform its functions properly. By contrast, the brain's capacity to learn, store memories and adapt to changes in the environment is associated with network plasticity, which leads to changing, dynamic synapses between neurons. In addition to cellular homeostasis mechanisms, synaptic homeostasis mechanisms such as synaptic scaling have been suggested; they prevent the network from becoming unstable while still allowing plastic changes [5].

Neuronal properties need to be constrained in order to achieve the target neural behavior of any cellular homeostasis mechanism. Usually, these constraints result in regions of parameter space that generate the desired neuronal behavior. When the target activity level is characterized by certain attributes, attribute LSs can represent such regions. The fact that a wide range of parameter combinations can generate the same neuronal output makes neurons more robust to perturbations.

There is experimental evidence for the idea that target activity levels can be achieved with different parameter combinations, rather than by tuning parameters to specific unique values [1,5,6]. Moreover, the so-called animal-to-animal parameter variability [7], which consists of parameter differences in a given neuron type from animal to animal, also supports this idea.

Furthermore, neural parameters do not seem to vary independently across the different solutions in parameter space for the same neural target; instead, dependencies among parameters appear to produce parameter correlations [8].

Parameter variability must be taken into account in model construction when the aim is to replicate experimental observations. Since only a small fraction of neuronal properties are experimentally accessible, the inaccessible parameters need to be determined by fitting to experimental data. The ability of the models to make predictions and to provide mechanistic explanations depends on the reliability of this process.

Neuronal parameter optimization is the process of identifying sets of parameters that lead to a desired electrical activity pattern in a neuron or neuronal network model that is not fully constrained by experimental data [10]. To perform this process, a large number of parameter estimation (PE) techniques and tools are available to scientists, including hand-tuning, optimization methods such as gradient methods, and parameter space exploration techniques [9]. A key feature of these methods is a measure that indicates how well the model reproduces the target electrical activity. Parameter sets that acceptably reproduce the desired electrical activity are called solutions of the optimization problem. When an optimization problem has multiple solutions, the set of solutions is known as the “solution space” of the problem.

The fact that the target activity level of a neuron is presumably an electrical activity pattern, rather than a specific set of parameters in a given model, gives rise to the degeneracy problem. Degeneracy refers to situations in which multiple sets of parameter values can produce the same observable output. This makes the inverse problem ill-posed, i.e., determining the model parameters from observable experimental data is not a well-defined problem.
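As a minimal illustration of degeneracy, consider a hypothetical passive membrane with two leak conductances `g1` and `g2` that share the same reversal potential. This toy model, the function name `simulate_passive` and all parameter values below are assumptions made for illustration; they are not the model used in this work. In this sketch the voltage depends on the conductances only through the sum `g1 + g2`, so parameter pairs with the same sum cannot be told apart from the voltage trace.

```python
import numpy as np

def simulate_passive(g1, g2, e_leak=-65.0, c_m=1.0, i_app=1.0,
                     v0=-65.0, dt=0.01, t_end=50.0):
    """Forward-Euler solution of C dV/dt = -(g1 + g2) (V - E) + I.

    Hypothetical toy model: both conductances share the reversal
    potential e_leak, so only their sum affects the dynamics.
    """
    n_steps = int(round(t_end / dt))
    v = np.empty(n_steps + 1)
    v[0] = v0
    for k in range(n_steps):
        dv = (-(g1 + g2) * (v[k] - e_leak) + i_app) / c_m
        v[k + 1] = v[k] + dt * dv
    return v

# Two different parameter sets with the same sum g1 + g2 = 0.2 ...
trace_a = simulate_passive(g1=0.05, g2=0.15)
trace_b = simulate_passive(g1=0.12, g2=0.08)
# ... and one with a different sum, g1 + g2 = 0.3.
trace_c = simulate_passive(g1=0.10, g2=0.20)

same_output = np.allclose(trace_a, trace_b)           # degenerate pair
different_output = not np.allclose(trace_a, trace_c)  # distinguishable pair
print(same_output, different_output)
```

In this toy case the line `g1 + g2 = 0.2` plays the role of a level set on which the whole output, and therefore every attribute derived from it, is constant. Recovering `g1` and `g2` separately from the trace is not possible.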

Structural identifiability [11,12] refers to the problem of whether the unknown model parameters can be uniquely determined from experimental data. Several techniques are available for the analysis of structural identifiability, especially for linear ODE models [13]. More recent methods have also been proposed for general nonlinear ODE models [14]. Although these methods become increasingly difficult to apply as models become more complex, structural identifiability should be analyzed in any parameter identification problem.

All things considered, the biological consequences of homeostatic regulation mechanisms, not only at the neuron level but also at the network level involving synaptic connections, make model construction quite challenging. On the one hand, physiological parameter variability must be reflected in the model. From a mathematical perspective, attribute level sets must have properties consistent with the biology underlying these mathematical structures. The study of attribute level sets in single-neuron and network models tries to shed light on this issue. On the other hand, tuning model parameters to reflect realistic cellular and network behavior based on experimental attribute measurements is difficult given the presence of degeneracy in biological systems.

Significance

Some parameter estimation techniques, such as parameter space exploration techniques, take the structural unidentifiability of neuron and network models into account and focus on finding regions of parameter space with the desired neuron or network activity. These methods scan the parameter space, producing so-called “model databases” [15], which provide valuable information on how parameters could be homeostatically regulated in order to produce a target activity. When the target behavior is characterized by the values of certain attributes, attribute level sets (LSs) appear. They represent the points in parameter space for which a given attribute is constant, i.e., manifolds in parameter space that preserve a given attribute value. If a neural or network activity target is characterized by several attribute values, the intersection of the corresponding manifolds represents the region of parameter space that preserves all the attribute values characterizing the activity target.
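The following sketch illustrates attribute level sets and their intersection on a small grid scan of parameter space, in the spirit of a model database. It assumes a hypothetical passive membrane with two conductances `g1` and `g2` that have different reversal potentials `E1` and `E2`, for which two attributes are available in closed form: the time constant and the steady-state voltage. The model, the helper names, the grid, the targets and the tolerance are illustrative assumptions and are not taken from this work.

```python
import numpy as np

# Assumed capacitance and reversal potentials of the toy model.
C_M, E1, E2 = 1.0, -80.0, -40.0

def time_constant(g1, g2):
    """Attribute 1: membrane time constant C / (g1 + g2)."""
    return C_M / (g1 + g2)

def steady_state_voltage(g1, g2):
    """Attribute 2: conductance-weighted mean of the reversal potentials."""
    return (g1 * E1 + g2 * E2) / (g1 + g2)

def level_set_mask(attribute_values, target, tol):
    """Grid points whose attribute value lies within tol of the target."""
    return np.abs(attribute_values - target) <= tol

# Scan a regular grid in (g1, g2) with spacing 0.01.
g_values = np.linspace(0.01, 0.5, 50)
g1, g2 = np.meshgrid(g_values, g_values, indexing="ij")

tau_ls = level_set_mask(time_constant(g1, g2), target=5.0, tol=1e-6)
v_ls = level_set_mask(steady_state_voltage(g1, g2), target=-60.0, tol=1e-6)

# Parameter sets that preserve both attribute values at once.
both = tau_ls & v_ls
print(tau_ls.sum(), v_ls.sum(), both.sum())
print(g1[both], g2[both])
```

For these assumed values the time-constant level set is the line `g1 + g2 = 0.2` and the steady-state voltage level set is the diagonal `g1 = g2`, so the two level sets are attribute-dependent and intersect only at `g1 = g2 = 0.1`. A grid scan of this kind needs one model evaluation per grid point, so its cost grows quickly with the number of parameters.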

The disadvantages of these methods include their computational cost and the exponential increase in the number of simulations as the parameter space is extended, which is usually required for neural networks. Moreover, these methods are mainly qualitative. In this respect, the study of basic level set properties in simple single-neuron and network models can help in understanding the degeneracy problem.

Project Description

The goal of the project is to study how the LSs of individual neurons behave when the cells become part of a network. We ask whether and under what conditions individual attribute LSs are preserved in a network structure and how new network level sets emerge.

An oversimplified single-neuron model is used to represent the behavior of a single cell. By characterizing degeneracy in this simple model, we are able to find conditions in connectivity parameter space for the preservation of individual level sets. When these conditions are not met, new network level sets emerge in parameter spaces that may involve both connectivity parameters and intrinsic parameters of the single cells. We consider networks with different degrees of complexity based on the individual intrinsic parameters, distinguishing between homogeneous and heterogeneous networks, and compare the LS properties between them.

Overview

- Background: The theoretical background in computational neuroscience relevant to this work is presented. The section gives an overview of basic single-neuron and synaptic dynamics models. In addition, some mathematical models of neuronal networks are presented. Some concepts are illustrated on a biophysical network model of the basal ganglia.

- Previous Work: A review of previous work related to attribute level sets. Two papers and one PhD rotation project are summarized and discussed.

- Methods: This section introduces the single-neuron model as well as the types of networks considered in this work. Degeneracy is characterized in this single-neuron model, and concepts that are important for the following sections are defined.

- Results: The results include conditions for the preservation of level sets in the two-cell network and a systematic qualitative study of the newly emerged network level sets in both the self-connected cell and the two-cell network.

- Discussion: A final summary of the work, in which the results are discussed and new lines of research are mentioned.

You can download the thesis here.

References

[1] Prinz, A. A., Bucher, D., & Marder, E. (2004). Similar network activity from disparate circuit parameters. Nature Neuroscience, 7, 1345–1352.

[2] Rotstein, H. G., Olarinre, M., & Golowasch, J. (2016). Dynamic compensation mechanism gives rise to period and duty cycle level sets in oscillatory neuronal models. Journal of Neurophysiology, 116, 2431–2452.

[3] Olypher, A. V., & Calabrese, R. L. (2007). Using constraints on neural activity to reveal compensatory changes in neuronal parameters. Journal of Neurophysiology, 98, 3749–3758.

[4] Olypher, A. V., & Prinz, A. A. (2010). Geometry and dynamics of activity-dependent homeostatic regulation in neurons. Journal of Computational Neuroscience, 28, 361–374.

[5] Prinz, A. A. (2008). Stability and plasticity in neuronal and network dynamics. In I. Soltesz & K. Staley (Eds.), Computational Neuroscience in Epilepsy (Chapter 16, pp. 247–VIII). Academic Press.

[6] Marder, E., & Goaillard, J.-M. (2006). Variability, compensation and homeostasis in neuron and network function. Nature Reviews Neuroscience, 7(7), 563–574.

[7] Prinz, A., Smolinski, T., & Hudson, A. (2011). Understanding animal-to-animal variability in neuronal and network properties. In The Dynamic Brain: An Exploration of Neuronal Variability and Its Functional Significance.

[8] Khorkova, O., & Golowasch, J. (2007). Neuromodulators, not activity, control coordinated expression of ionic currents. The Journal of Neuroscience, 27(32), 8709–8718.

[9] De Schutter, E. (Ed.) (2013). Computational Modeling Methods for Neuroscientists.

[10] Prinz, A. A. (2007). Neuronal parameter optimization. Scholarpedia, 2(1), 1903.

[11] Bellman, R., & Åström, K. J. (1970). On structural identifiability. Mathematical Biosciences, 7(3–4), 329–339.

[12] Walter, E. (Ed.) (1987). Identifiability of Parametric Models. Pergamon, Oxford.

[13] Godfrey, K. R., & DiStefano, J. J. (1987). Identifiability of Model Parameters. Pergamon, Oxford.

[14] Miao, H., Xia, X., Perelson, A. S., & Wu, H. (2011). On identifiability of nonlinear ODE models and applications in viral dynamics. SIAM Review, 53(1), 3–39.

[15] Computational exploration of neuron and neural network models in neurobiology. In C. J. Crasto (Ed.), Methods in Molecular Biology: Bioinformatics (pp. 167–179). Humana Press, Totowa, NJ.